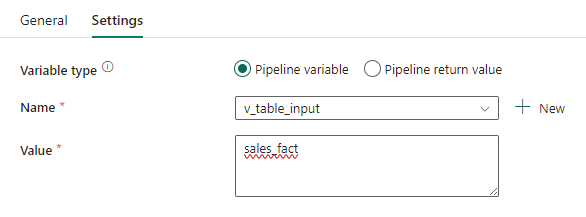

In this small data copy pipeline, the idea is to dynamically set a table name as a variable and execute activities in the consecutive pipeline activities that use this variable to perform the computations. For example, the first two activities that run in parallel, are the variables that set values to be used. v_table_input sets the name of the table to be used throughout the process when creating and loading the specified table into a warehouse. The v_table_copy_input variable sets the name of the Lakehouse delta table, to be used to copy the data from. In this pipeline the data is being copied from a lakehouse delta table to a warehouse table in the dbo schema.

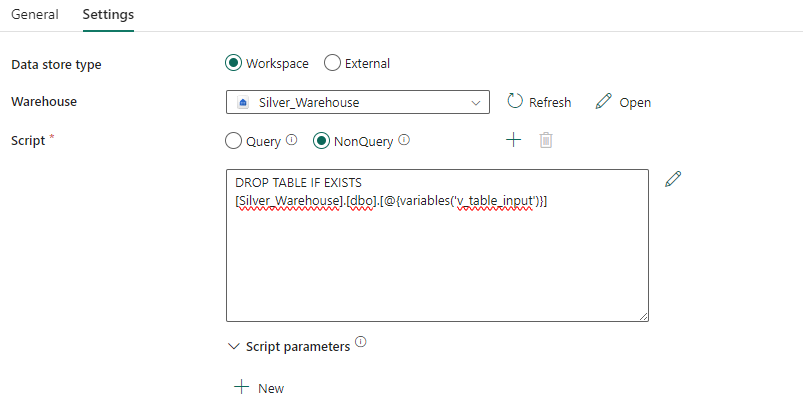

The following step in the pipeline, executing a script, could be set to query, or non-query. If you chose a query you can query a warehouse database as needed. If you chose non-query, you could perform SQL operations such as create table. In this case, I am dropping a table if it exists, which should be performed with caution as data will be removed from the db if it exists in the specified table name. A little bit tricky is adding the variable. You need to add the following code into the SQL script to add the variable string into the warehouse, schema and table name.

Using this syntax: @, you can add the variable which is dynamic in this case, as we can adjust the variable value in the previous step any time we want.

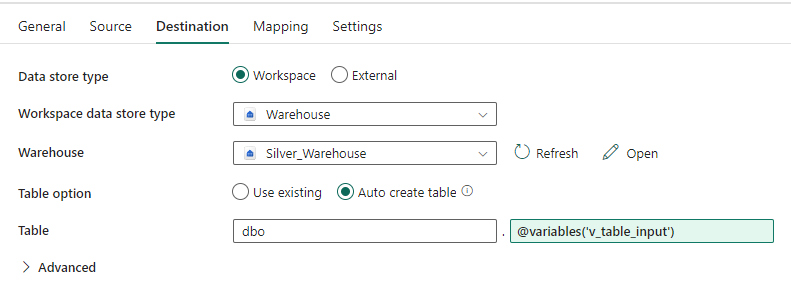

Next, the copy data activity needs a source, from which to get data, and a destination to load the data into. In my case I used the default dbo schema, however you could also use a variable and make this value dynamic. I used the table name variable, to set a table name.

if you hover over the table name with your mouse, an option to set dynamic content will appear. Click that, and a window with a menu will open, where you can navigate to variables, and select the variable you want.

Note: Setting to Auto create table, will either create a new table if non is found (which in my case will always happen because I drop the table with the variable name before. However, if you want to append data to the table, make sure the table name exists, and when set to auto create table, the data will be appended.

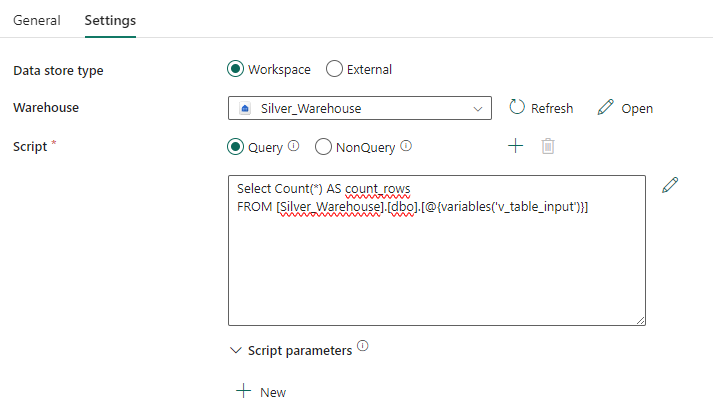

Finally, I run a query that counts the number of rows after the data has been loaded into the new table. I then set that value to a new variable, by using the set variable after the script activity. This is just for practice, of course you can perform more complex queries, summarize the data etc. However the result should be a single value, if you set the variable. It makes life a bit easier. If you query the top 100 rows, for example, the question would be what to do with that later. The output is in the JSON format, as far as I understood, therefore working with this output can be a bit tedious, if not too familiar with JSON.

this time I chose a query, and named the output column count_rows. You need to define a column name that you can use later when calling the value of the first row for setting the next variable.

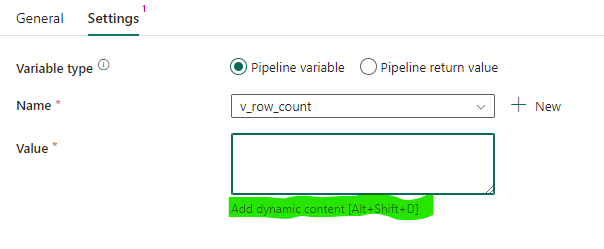

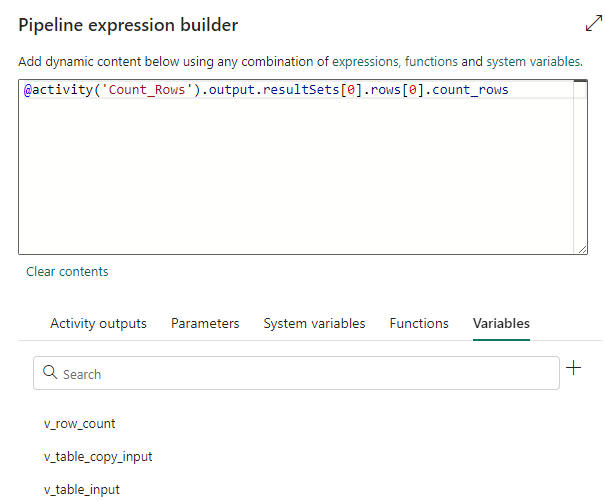

Setting the final variable by using the pipeline expression builder, will require you to define that you want the value from row 0, of the query output previously.

To enter this, hover over the field so that the add dynamic content option appears, click it and paste the code above into the expression builder. Adjust the activity name in the brackets, and the column name at the end of the code.

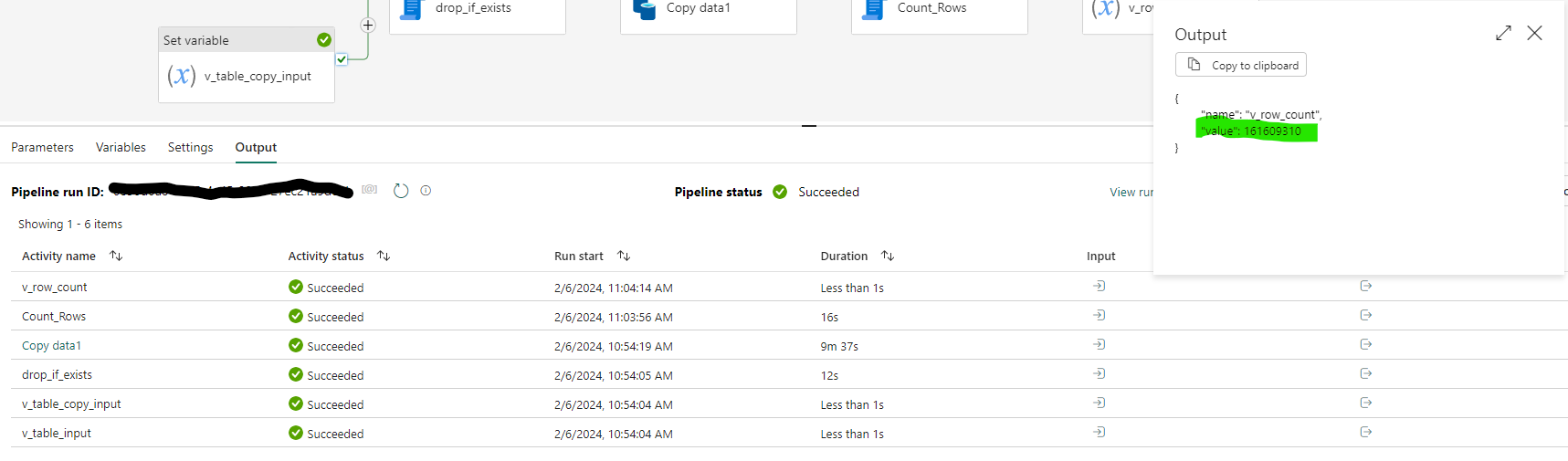

Finally, after running the pipeline successfully you should get something like this.

To see the pipeline execution output, click anywhere on the canvas outside of your activities, and select the output section. You can also check what variables you have, by clicking on the variables section at any time to have an overview or remind yourself of the variables you have available.

Final note: the copy acitvity permits you to copy data from external sources, or from within the same workspace. So in my case, the Lakehouse and the Warehouse are located in the same workspace for this to work.

To summarize, you can change the variable value at a single place, and the pipeline will adjust accordingly at every step. And you can use the output variable as you want.