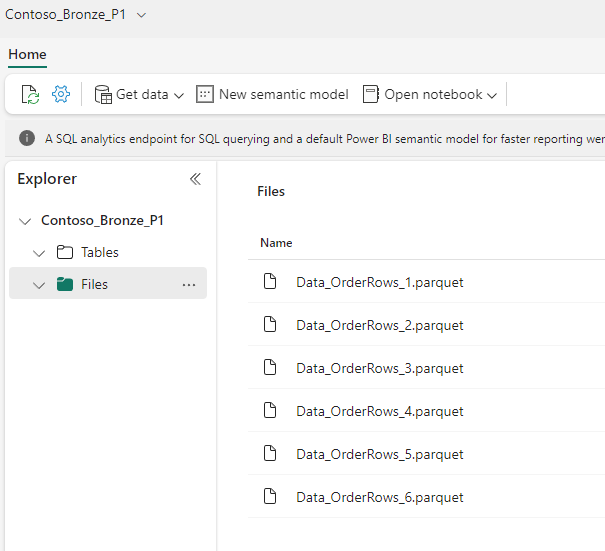

As Microsoft Fabric is offering an analytics engineering certification, I decided to share some of the things I’m learning along the way. I felt particularly motivated when learning about the Lakehouse medallion architecture, as you can automate processes, improve data quality and serve several stakeholders at the same time. For this article I have uploaded the Contoso parquet files for the sales fact table, without making too many transformations between the bronze and silver layer. In the article image illustration, you can see that there are several ways to get data into the OneLake of Microsoft Fabric, however I have simply uploaded the files manually, into a bronze layer lakehouse, sitting inside the bronze workspace I created, and linked to a dedicated bronze Fabric domain. I believe the domain will serve a bigger purpose further down the stream, in regards to data ownership and data governance and security. The bronze layer Lakehouse, holds the raw data, without any tranformation whatsoever. The data is saved as the parquet file format.

To start, I made a plan, on what transformations are necessary, for the files in the bronze layer to successfully load into a single sales_fact delta table in the silver layer.

The first decision I made, is to save the data as a managed delta table, as I experienced difficulties moving and locating external delta tables throughout the Fabric environment.

However, this would mean that if the delta table is deleted, the files inside the SQL Endpont DB also dissapear. If you delete an external table, you will still see the files holding the data located in the files section of the lakehouse. So there theoretically would be an additional security layer. Also a note regarding the external delta table: it will not appear in the SQL Endpoint by default.

The use case for the data transformation looks as follows:

- Sales fact raw data in bronze Lakehouse layer and workspace (parquet files with numeration). These files need to be combined to a single table.

- Silver Lakehouse layer in the silver workspace is the load and transform destination.

- We have several parquet files that need to be combined and transformed into a single delta table in the Silver Lakehouse layer.

- We need an additional code, that checks for new files in the bronze layer, and combines it with the existing data in the silver Lakehouse layer automatically.

- We need an indicator, of what the last added data files were, to determine which files are the new ones to be combined with the existing data.

- We want the result to be an external Delta table, and a managed Delta table and explore the benefits and limitations between the two.

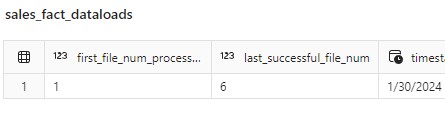

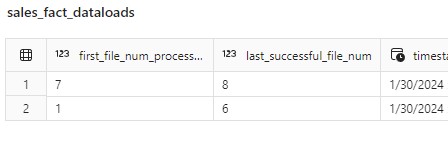

In order to achieve this, we have to save information about the last data load and transformation somewhere. I chose to save it in a separat sales_fact_dataloads table, to keep track of the past data load and see what the previous loads were.

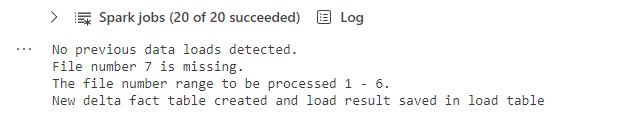

Assumptions: I have to assume a few things for this code, the first is that the file numbering in the raw layer lakehouse are incrementing, as data is added, without any gaps in the numbering. A loop that skips gaps and continues counting up (i), whilst also keeping track of missing files count, proved to be too difficult at this time. Instead a loop that simply tracks the next missing file number and stops there, was good enough.

Also note in the illustration, that we are using an ELT process, extract, load and then transform, for the medallion architecture.

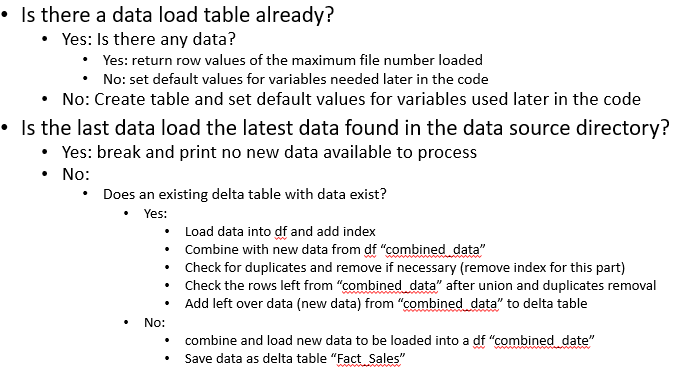

The conditions for the lakehouse notebook data load and tranformation, would look as follows:

This is how the data looks in the bronze lakehouse layer:

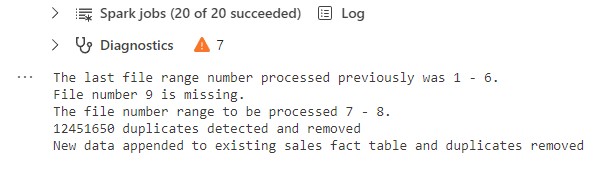

I tried the code with a few scenarios; with one file missing in between the file numbering, adding a new file to the bunch with correct numbering, adding a file that holds duplicates or previously loaded data, but with the correct numbering. Do note: this code does not check the delta table location, and compare it to all the files in the source library. It orientates itself using the file numeration, and checks if there is a new file.

The code in the end looks like this:

The code does the following in steps:

It checks if there is the data_loads delta table, if there is none, create it.

Then it sets default variables, either from an existing data_loads table or other default values if there has been no data load before (it is the first data load).

Then it loops through the file numeration, counting up 1 step at a time, and checking if there are missing files (for example if the last file number is 7, 8 would come out as a missing file number). Then it combines the data in a df that has been found and performs minor transformations. It adds an index for example and replaces blanks in the column names with underscores.

It then loads the existing (if there is any) data from the sales_fact delta table into a seperate df, and combines it with the combined data from the loop.

Then we check for duplicates, and remove them accordingly, separate the new data from the old data using the index, remove the index in another df and then append the data to the existing delta table.

If there was no previous data, then we check for and remove duplicates, and create the new delta table with the data, and save the data load information in the separate data_loads delta table.

This is the output, of a first successful dataload:

This is the output, when new data with duplicates is added, but not all data are duplicates:

I hope this helps when data dumping into a location occurs (with a coordinated file naming), and perhaps you need to combine the data and load it. I’m sure the code can be improved, extended or limited, I am currently learning this myself.